Technology

Machine Learning

History Scene

Music 01

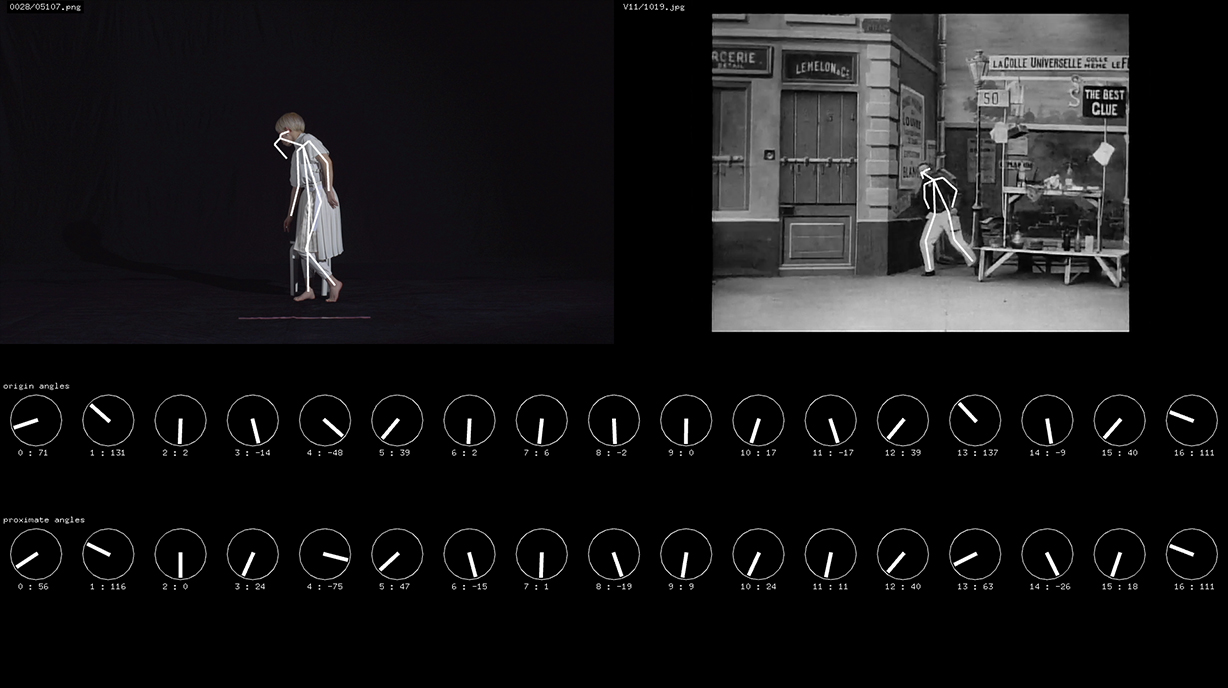

Using OpenPose, we analyzed publicly available stage footage and movie scenes to collect pose data, and developed a nearest-neighbor search system that compares this data with pose data obtained from analyzing the dancer's movements. Starting from the actual choreography footage for this piece, we attempted to create a production from the video material whose pose was closest on a frame-by-frame basis.
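The per-frame matching described above can be illustrated with a brute-force nearest-neighbor search. This is only a minimal sketch, not the system used in the production: the function names, the 2D keypoint format, and the normalization step (centering and scaling each pose) are all assumptions made for illustration.

```python
import math

def normalize_pose(keypoints):
    """Flatten a pose after centering it and scaling it to unit size.

    keypoints is a list of (x, y) joint positions (assumed format).
    Normalizing lets poses be compared regardless of where the person
    stands in the frame or how large they appear.
    """
    n = len(keypoints)
    cx = sum(x for x, _ in keypoints) / n
    cy = sum(y for _, y in keypoints) / n
    centered = [(x - cx, y - cy) for x, y in keypoints]
    scale = math.sqrt(sum(x * x + y * y for x, y in centered)) or 1.0
    return [c / scale for point in centered for c in point]

def nearest_pose(query, library):
    """Return (index, distance) of the library pose closest to the query."""
    q = normalize_pose(query)
    best_index, best_distance = -1, math.inf
    for i, candidate in enumerate(library):
        d = math.dist(q, normalize_pose(candidate))
        if d < best_distance:
            best_index, best_distance = i, d
    return best_index, best_distance

# Hypothetical three-joint poses standing in for collected footage.
library = [
    [(0, 0), (1, 0), (0, 1)],
    [(0, 0), (2, 0), (4, 0)],
]
# A dancer frame: the first library pose, shifted and doubled in size.
dancer_frame = [(10, 5), (12, 5), (10, 7)]
index, distance = nearest_pose(dancer_frame, library)
```

Running the search once per frame of the choreography footage would yield one matched clip per frame. For a large pose library, a spatial index or an approximate nearest-neighbor method would likely replace the linear scan.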

1 audience scene

Music 09

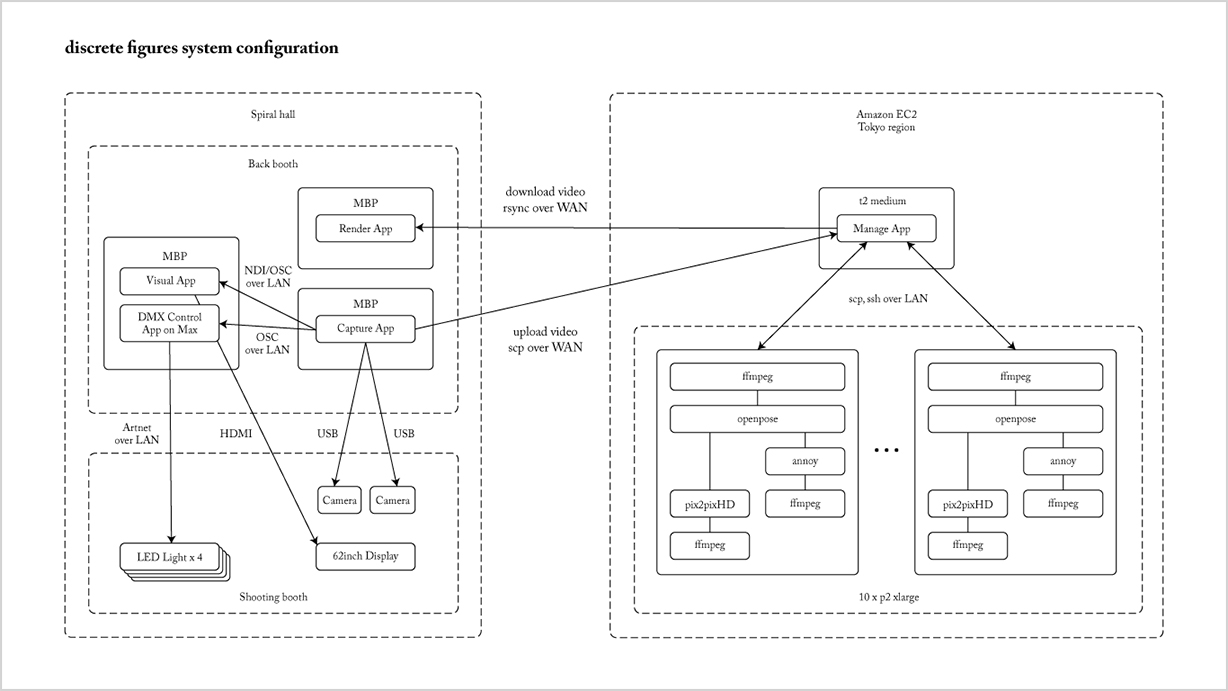

We set up a booth in the venue lobby and filmed the audience. The participants' clothing characteristics and movements were analyzed on multiple remote servers right up until the performance, and we combined that analytical data with motion data from the ELEVENPLAY dancers to feature the audience as dancers.

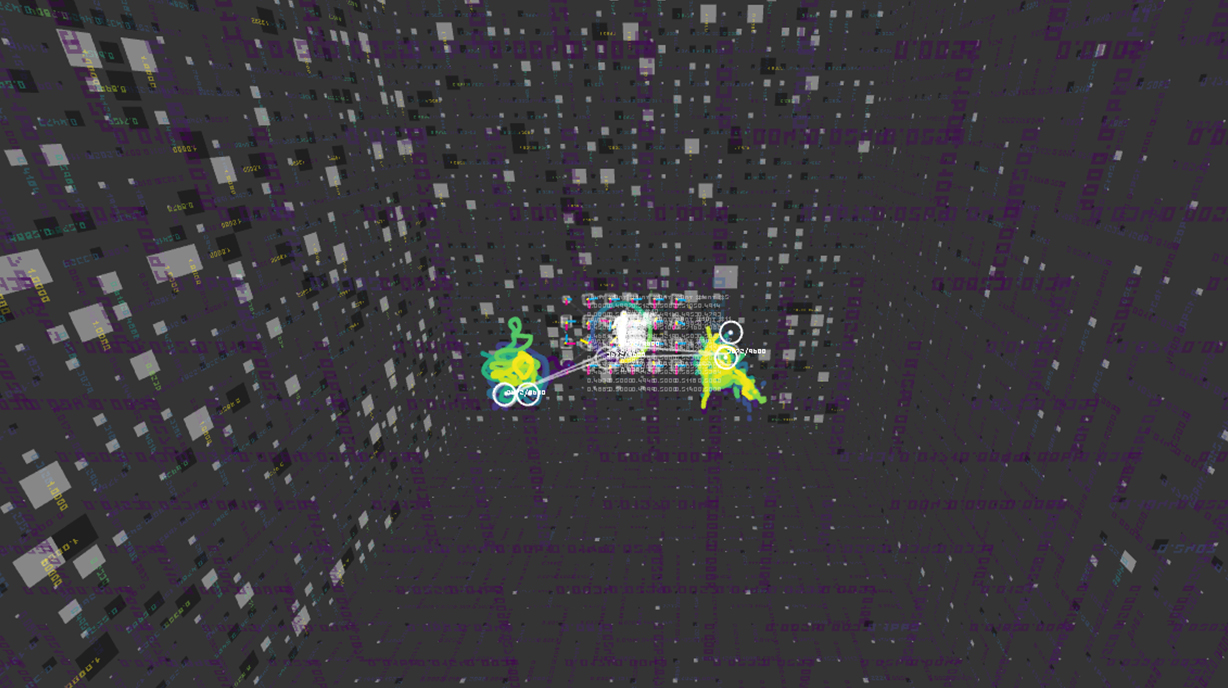

2 dimensionality reduction scene

Music 09

Using dimensionality reduction techniques, we projected the motion data into two and three dimensions and visualized the result.
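The text does not say which reduction technique was used. As one possible illustration, the sketch below applies principal component analysis (PCA) with NumPy to project motion frames into two or three dimensions. The function name and the input layout (one row per frame, one column per joint coordinate) are assumptions.

```python
import numpy as np

def reduce_motion(frames, n_components=2):
    """Project motion frames onto their first principal components.

    frames: array-like of shape (n_frames, n_features), assumed to hold
    one flattened set of joint coordinates per frame.
    Returns an array of shape (n_frames, n_components).
    """
    data = np.asarray(frames, dtype=float)
    centered = data - data.mean(axis=0)
    # Rows of vt are the principal directions, ordered by explained variance.
    _, _, vt = np.linalg.svd(centered, full_matrices=False)
    return centered @ vt[:n_components].T

# Synthetic stand-in for motion capture data: 50 frames, 9 coordinates.
rng = np.random.default_rng(0)
motion = rng.normal(size=(50, 9)) * np.array([5, 3, 1, 1, 1, 1, 1, 1, 1])
points_2d = reduce_motion(motion, n_components=2)
points_3d = reduce_motion(motion, n_components=3)
```

Each output row can then be plotted as a point, so a dance becomes a trajectory through the reduced space. Nonlinear methods such as t-SNE or UMAP are common alternatives when the structure of interest is not captured by a linear projection.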

AI Dancer scene

Music 10

We were interested in dance itself: the different types of dancers and styles, and how musical beats connect to improvisational dance. To explore this further, we worked together with Parag Mital to create a network called dance2dance:

https://github.com/pkmital/dance2dance

This network is based on Google's seq2seq architecture. It is similar to char-rnn in that it is a neural network architecture that can be used for sequential modeling.

https://google.github.io/seq2seq/

https://github.com/karpathy/char-rnn

Using the motion capture system, we captured approximately 2.5 hours of dance data at 60 fps over about 40 sessions. Each session involved the dancers improvising under 11 themes: joyful, angry, sad, fun, robot, sexy, junkie, chilling, bouncy, wavy, and swingy. To maintain a constant flow, the dancers were given a beat of 120 bpm.
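To give a sense of scale, the figures above can be turned into frame counts, assuming the 2.5 hours is the total across all sessions. The windowing function that follows is a hypothetical illustration of how a frame sequence might be cut into beat-aligned source and target pairs for sequence-to-sequence training. It is not taken from the dance2dance repository, and the four-beat window lengths are arbitrary.

```python
FPS = 60            # capture rate stated in the text
BPM = 120           # beat given to the dancers
TOTAL_HOURS = 2.5   # approximate total, assumed to span all sessions

frames_per_beat = FPS * 60 // BPM               # 30 frames per beat
total_frames = int(TOTAL_HOURS * 3600 * FPS)    # about 540,000 frames
total_beats = total_frames // frames_per_beat   # about 18,000 beats

def beat_aligned_pairs(frames, beats_in=4, beats_out=4):
    """Cut a frame sequence into (source, target) windows that start on a beat.

    Each source window covers beats_in beats, and its target is the
    beats_out beats that immediately follow. Windows advance one beat
    at a time, so consecutive pairs overlap.
    """
    source_len = beats_in * frames_per_beat
    target_len = beats_out * frames_per_beat
    last_start = len(frames) - source_len - target_len
    pairs = []
    for start in range(0, last_start + 1, frames_per_beat):
        middle = start + source_len
        pairs.append((frames[start:middle], frames[middle:middle + target_len]))
    return pairs

# Five seconds of placeholder frames (integers stand in for pose vectors).
session = list(range(5 * FPS))
pairs = beat_aligned_pairs(session)
```

Because the dancers moved to a fixed 120 bpm beat, every window boundary in this scheme falls on a beat, which keeps the rhythmic phase consistent across training examples.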

Background movie

This time we generated the background movie with “StyleGAN”, which was introduced in the paper “A Style-Based Generator Architecture for Generative Adversarial Networks” by NVIDIA Research.

http://stylegan.xyz/paper

“StyleGAN” has been released as open source, and the code is available on GitHub.

https://github.com/NVlabs/stylegan

We trained “StyleGAN” on an NVIDIA DGX Station using the data we had captured from the dance performance.

https://www.nvidia.com/ja-jp/data-center/dgx-station/

Hardware

Drone

For hardware, we used five palm-sized microdrones. Their small size makes them safer and more mobile than older models, and on stage they give the visual impression of balls of light floating in the air. The drones' positions are measured externally by a motion capture system, and the drones are controlled in real time over 2.4 GHz wireless communication. The drone movements are produced from motion capture data that has been analyzed and generated in advance.
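The control loop described above (externally measured position in, real-time command out) can be sketched as a simple proportional controller. This is an illustrative assumption, not the controller used on stage: the function names, the gain, the speed limit, and the idealized simulation in which the drone moves exactly as commanded are all hypothetical.

```python
import math

def velocity_command(measured, target, gain=1.5, max_speed=1.0):
    """Return a velocity (m/s, assumed units) steering the drone to the target.

    measured: current position reported by the motion capture system.
    target:   desired position taken from the pre-generated trajectory.
    The command is proportional to the position error and clamped to
    max_speed so that large errors do not produce abrupt movements.
    """
    command = [gain * (t - m) for m, t in zip(measured, target)]
    speed = math.sqrt(sum(c * c for c in command))
    if speed > max_speed:
        command = [c * max_speed / speed for c in command]
    return command

def follow(start, targets, dt=1 / 60):
    """Simulate an idealized drone tracking one target position per tick."""
    position = list(start)
    for target in targets:
        command = velocity_command(position, target)
        position = [p + c * dt for p, c in zip(position, command)]
    return position

# Hold a single waypoint for ten seconds of 60 Hz updates.
final_position = follow([0.0, 0.0, 0.0], [[1.0, 0.0, 1.0]] * 600)
```

In a real system, the measured position would come from the motion capture stream on each tick rather than from the simulation, and the command would be sent over the wireless link to the drone's onboard flight controller.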

Frame

The frame plays an important role in projection mapping onto the half screen and in AR synthesis. It contains seven infrared LEDs and built-in batteries, and the whole structure is recognized as a rigid body by the motion capture system. Because retroreflective markers are visible to the naked eye, they are usually not well suited to stage props. Instead, we designed and developed a system using infrared LEDs and diffusive reflectors that allows stable tracking while remaining invisible to the naked eye.